Cognitive services are booming. Azure offers one such service: Cognitive Services. The catch is that the image processing happens on Azure's side: you have to send your images to the cloud, and the processing is billed.

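There are open-source alternatives that run locally, such as OpenCV 4.0. OpenCV itself is written in C++, with official bindings for Python and Java; from C#, it can be used through the third-party Emgu CV wrapper.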

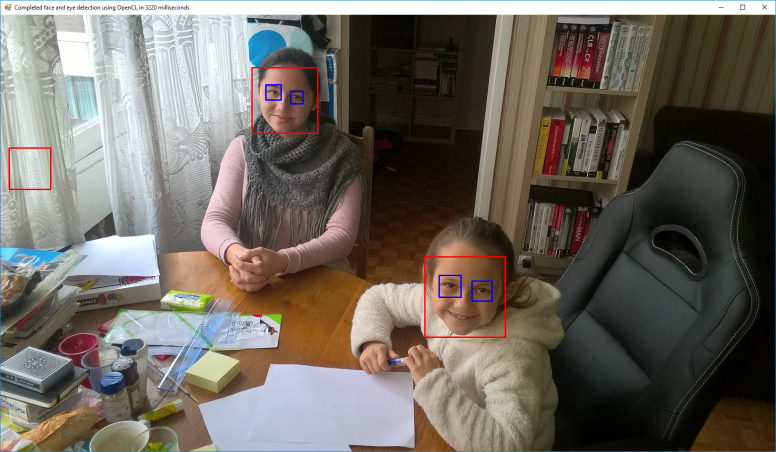

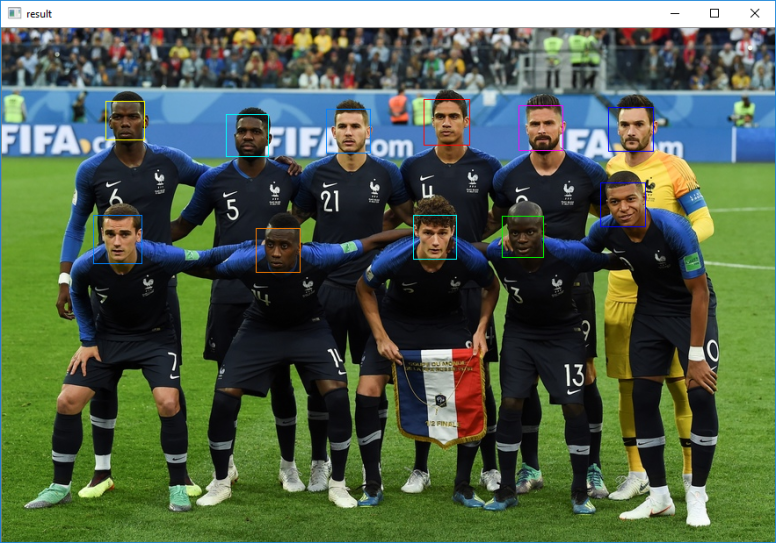

Wondering whether the code is complicated? Here are two examples.

In C#, using Emgu CV (split over two files, `Program.cs` and `DetectFace.cs`):

```csharp
// Program.cs
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Drawing;
using System.Runtime.InteropServices;
using System.Windows.Forms;
using Emgu.CV;
using Emgu.CV.CvEnum;
using Emgu.CV.Structure;
using Emgu.CV.UI;
using Emgu.CV.Cuda;

namespace FaceDetection
{
    static class Program
    {
        /// <summary>
        /// The main entry point for the application.
        /// </summary>
        [STAThread]
        static void Main()
        {
            Application.EnableVisualStyles();
            Application.SetCompatibleTextRenderingDefault(false);
            Run();
        }

        static void Run()
        {
            IImage image;

            //Read the files as an 8-bit Bgr image
            image = new UMat("Attach01.jpg", ImreadModes.Color); //UMat version
            //image = new Mat("lena.jpg", ImreadModes.Color); //CPU version

            long detectionTime;
            List<Rectangle> faces = new List<Rectangle>();
            List<Rectangle> eyes = new List<Rectangle>();

            DetectFace.Detect(
                image,
                @"data\haarcascades\haarcascade_frontalface_default.xml",
                @"data\haarcascades\haarcascade_eye.xml",
                faces, eyes,
                out detectionTime);

            // Faces in red, eyes in blue
            foreach (Rectangle face in faces)
                CvInvoke.Rectangle(image, face, new Bgr(Color.Red).MCvScalar, 2);
            foreach (Rectangle eye in eyes)
                CvInvoke.Rectangle(image, eye, new Bgr(Color.Blue).MCvScalar, 2);

            //display the image, reporting which backend was used
            using (InputArray iaImage = image.GetInputArray())
                ImageViewer.Show(image, String.Format(
                    "Completed face and eye detection using {0} in {1} milliseconds",
                    (iaImage.Kind == InputArray.Type.CudaGpuMat && CudaInvoke.HasCuda) ? "CUDA" :
                    (iaImage.IsUMat && CvInvoke.UseOpenCL) ? "OpenCL"
                    : "CPU",
                    detectionTime));
        }
    }
}
```

```csharp
// DetectFace.cs
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Drawing;
using Emgu.CV;
using Emgu.CV.Structure;
#if !(__IOS__ || NETFX_CORE)
using Emgu.CV.Cuda;
#endif

namespace FaceDetection
{
    public static class DetectFace
    {
        public static void Detect(
            IInputArray image, String faceFileName, String eyeFileName,
            List<Rectangle> faces, List<Rectangle> eyes,
            out long detectionTime)
        {
            Stopwatch watch;

            using (InputArray iaImage = image.GetInputArray())
            {
#if !(__IOS__ || NETFX_CORE)
                // GPU path: only taken when the image is a GpuMat and CUDA is available
                if (iaImage.Kind == InputArray.Type.CudaGpuMat && CudaInvoke.HasCuda)
                {
                    using (CudaCascadeClassifier face = new CudaCascadeClassifier(faceFileName))
                    using (CudaCascadeClassifier eye = new CudaCascadeClassifier(eyeFileName))
                    {
                        face.ScaleFactor = 1.1;
                        face.MinNeighbors = 10;
                        face.MinObjectSize = Size.Empty;
                        eye.ScaleFactor = 1.1;
                        eye.MinNeighbors = 10;
                        eye.MinObjectSize = Size.Empty;
                        watch = Stopwatch.StartNew();
                        using (CudaImage<Bgr, Byte> gpuImage = new CudaImage<Bgr, byte>(image))
                        using (CudaImage<Gray, Byte> gpuGray = gpuImage.Convert<Gray, Byte>())
                        using (GpuMat region = new GpuMat())
                        {
                            face.DetectMultiScale(gpuGray, region);
                            Rectangle[] faceRegion = face.Convert(region);
                            faces.AddRange(faceRegion);
                            foreach (Rectangle f in faceRegion)
                            {
                                using (CudaImage<Gray, Byte> faceImg = gpuGray.GetSubRect(f))
                                {
                                    //For some reason a clone is required.
                                    //Might be a bug of CudaCascadeClassifier in opencv
                                    using (CudaImage<Gray, Byte> clone = faceImg.Clone(null))
                                    using (GpuMat eyeRegionMat = new GpuMat())
                                    {
                                        eye.DetectMultiScale(clone, eyeRegionMat);
                                        Rectangle[] eyeRegion = eye.Convert(eyeRegionMat);
                                        foreach (Rectangle e in eyeRegion)
                                        {
                                            // Eye rectangles are relative to the face: shift them back
                                            Rectangle eyeRect = e;
                                            eyeRect.Offset(f.X, f.Y);
                                            eyes.Add(eyeRect);
                                        }
                                    }
                                }
                            }
                        }
                        watch.Stop();
                    }
                }
                else
#endif
                {
                    //Read the HaarCascade objects
                    using (CascadeClassifier face = new CascadeClassifier(faceFileName))
                    using (CascadeClassifier eye = new CascadeClassifier(eyeFileName))
                    {
                        watch = Stopwatch.StartNew();

                        using (UMat ugray = new UMat())
                        {
                            CvInvoke.CvtColor(image, ugray, Emgu.CV.CvEnum.ColorConversion.Bgr2Gray);

                            //normalizes brightness and increases contrast of the image
                            CvInvoke.EqualizeHist(ugray, ugray);

                            //Detect the faces in the grayscale image; each one is returned as a Rectangle
                            Rectangle[] facesDetected = face.DetectMultiScale(
                                ugray, 1.1, 10, new Size(20, 20));
                            faces.AddRange(facesDetected);

                            foreach (Rectangle f in facesDetected)
                            {
                                //Get the region of interest on the faces
                                using (UMat faceRegion = new UMat(ugray, f))
                                {
                                    Rectangle[] eyesDetected = eye.DetectMultiScale(
                                        faceRegion, 1.1, 10, new Size(20, 20));
                                    foreach (Rectangle e in eyesDetected)
                                    {
                                        // Eye rectangles are relative to the face: shift them back
                                        Rectangle eyeRect = e;
                                        eyeRect.Offset(f.X, f.Y);
                                        eyes.Add(eyeRect);
                                    }
                                }
                            }
                        }
                        watch.Stop();
                    }
                }
                detectionTime = watch.ElapsedMilliseconds;
            }
        }
    }
}
```
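In both branches of `Detect`, the eye cascade only runs on the face region, so every eye rectangle it returns is relative to that region's top-left corner; `eyeRect.Offset(f.X, f.Y)` moves it back into whole-image coordinates. A minimal sketch of that arithmetic in plain C++, assuming a hypothetical `Rect` struct that mirrors the `X`/`Y`/`Width`/`Height` fields of `System.Drawing.Rectangle` (and of `cv::Rect`), with no Emgu CV or OpenCV dependency; `toImageCoords` and `addEyes` are illustrative names, not library functions:

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for System.Drawing.Rectangle / cv::Rect.
struct Rect {
    int x, y, width, height;
};

// Translate a rectangle found inside a region of interest (ROI) back into
// full-image coordinates by adding the ROI's origin (same as Offset(f.X, f.Y)).
Rect toImageCoords(const Rect& local, const Rect& roi) {
    // A detection made inside the ROI must lie within the ROI's bounds.
    assert(local.x >= 0 && local.y >= 0);
    assert(local.x + local.width <= roi.width);
    assert(local.y + local.height <= roi.height);
    return Rect{local.x + roi.x, local.y + roi.y, local.width, local.height};
}

// Convert every eye found in one face and append it to the image-wide list,
// playing the role of the eyes.Add(eyeRect) loop in DetectFace.cs.
void addEyes(const Rect& face, const std::vector<Rect>& eyesInFace,
             std::vector<Rect>& eyes) {
    for (const Rect& e : eyesInFace) {
        eyes.push_back(toImageCoords(e, face));
    }
}
```

For a face at (100, 50) of size 80×80, an eye found at (10, 20) inside it ends up at (110, 70) in the full image; its size is unchanged.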

In C++, with the native OpenCV API (a Visual Studio console project with a precompiled header, `pch.h`):

```cpp
// pch.h
#ifndef PCH_H
#define PCH_H

// TODO: add headers that you want to pre-compile here
#include "opencv2/objdetect.hpp"
#include "opencv2/highgui.hpp"
#include "opencv2/imgproc.hpp"
#include <cstdio>
#include <iostream>
#include <string>
#include <vector>

using namespace std;
using namespace cv;

// MSVC only: links the OpenCV 4.0.0 debug library (opencv_world400.lib in Release)
#pragma comment(lib, "opencv_world400d.lib")

#endif //PCH_H
```

```cpp
// ConsoleApplication1.cpp : This file contains the 'main' function. Program execution begins and ends there.
//
#include "pch.h"

string cascadeName;
string nestedCascadeName;

void detectAndDraw(Mat& img, CascadeClassifier& cascade,
                   CascadeClassifier& nestedCascade,
                   double scale, bool tryflip);

int main(int argc, const char** argv)
{
    Mat image;
    string inputName;
    bool tryflip;
    CascadeClassifier cascade, nestedCascade;
    double scale;

    cascadeName = "..\\data\\haarcascades\\haarcascade_frontalface_alt.xml";
    nestedCascadeName = "..\\data\\haarcascades\\haarcascade_eye_tree_eyeglasses.xml";
    scale = 1.3;
    inputName = "group3.jpg";
    tryflip = false;

    // The eye cascade is optional: detectAndDraw() skips eyes when it is empty
    if (!nestedCascade.load(samples::findFileOrKeep(nestedCascadeName)))
        cerr << "WARNING: Could not load classifier cascade for nested objects" << endl;
    if (!cascade.load(samples::findFile(cascadeName)))
    {
        cerr << "ERROR: Could not load classifier cascade" << endl;
        return 1;
    }

    image = imread(samples::findFileOrKeep(inputName), IMREAD_COLOR);
    if (image.empty())
    {
        cerr << "Could not read " << inputName << endl;
        return 1;
    }

    cout << "Detecting face(s) in " << inputName << endl;
    detectAndDraw(image, cascade, nestedCascade, scale, tryflip);
    waitKey(0);
    return 0;
}

void detectAndDraw(Mat& img, CascadeClassifier& cascade,
                   CascadeClassifier& nestedCascade,
                   double scale, bool tryflip)
{
    double t = 0;
    vector<Rect> faces, faces2;
    const static Scalar colors[] =
    {
        Scalar(255,0,0), Scalar(255,128,0), Scalar(255,255,0), Scalar(0,255,0),
        Scalar(0,128,255), Scalar(0,255,255), Scalar(0,0,255), Scalar(255,0,255)
    };
    Mat gray, smallImg;

    // Grayscale, shrink by 1/scale and equalize before detection
    cvtColor(img, gray, COLOR_BGR2GRAY);
    double fx = 1 / scale;
    resize(gray, smallImg, Size(), fx, fx, INTER_LINEAR_EXACT);
    equalizeHist(smallImg, smallImg);

    t = (double)getTickCount();
    cascade.detectMultiScale(smallImg, faces,
        1.1, 2, 0
        //|CASCADE_FIND_BIGGEST_OBJECT
        //|CASCADE_DO_ROUGH_SEARCH
        | CASCADE_SCALE_IMAGE,
        Size(30, 30));
    if (tryflip)
    {
        // Also search the mirrored image, then mirror the results back
        flip(smallImg, smallImg, 1);
        cascade.detectMultiScale(smallImg, faces2,
            1.1, 2, 0
            //|CASCADE_FIND_BIGGEST_OBJECT
            //|CASCADE_DO_ROUGH_SEARCH
            | CASCADE_SCALE_IMAGE,
            Size(30, 30));
        for (vector<Rect>::const_iterator r = faces2.begin(); r != faces2.end(); ++r)
        {
            faces.push_back(Rect(smallImg.cols - r->x - r->width, r->y, r->width, r->height));
        }
    }
    t = (double)getTickCount() - t;
    printf("detection time = %g ms\n", t * 1000 / getTickFrequency());

    for (size_t i = 0; i < faces.size(); i++)
    {
        Rect r = faces[i];
        Mat smallImgROI;
        vector<Rect> nestedObjects;
        Point center;
        Scalar color = colors[i % 8];
        int radius;

        // Near-square faces are drawn as circles, others as rectangles,
        // scaled back up to the original image size
        double aspect_ratio = (double)r.width / r.height;
        if (0.75 < aspect_ratio && aspect_ratio < 1.3)
        {
            center.x = cvRound((r.x + r.width*0.5)*scale);
            center.y = cvRound((r.y + r.height*0.5)*scale);
            radius = cvRound((r.width + r.height)*0.25*scale);
            circle(img, center, radius, color, 3, 8, 0);
        }
        else
            rectangle(img, Point(cvRound(r.x*scale), cvRound(r.y*scale)),
                      Point(cvRound((r.x + r.width - 1)*scale), cvRound((r.y + r.height - 1)*scale)),
                      color, 3, 8, 0);
        if (nestedCascade.empty())
            continue;

        // Look for eyes only inside the face region
        smallImgROI = smallImg(r);
        nestedCascade.detectMultiScale(smallImgROI, nestedObjects,
            1.1, 2, 0
            //|CASCADE_FIND_BIGGEST_OBJECT
            //|CASCADE_DO_ROUGH_SEARCH
            //|CASCADE_DO_CANNY_PRUNING
            | CASCADE_SCALE_IMAGE,
            Size(30, 30));
        for (size_t j = 0; j < nestedObjects.size(); j++)
        {
            Rect nr = nestedObjects[j];
            center.x = cvRound((r.x + nr.x + nr.width*0.5)*scale);
            center.y = cvRound((r.y + nr.y + nr.height*0.5)*scale);
            radius = cvRound((nr.width + nr.height)*0.25*scale);
            circle(img, center, radius, color, 3, 8, 0);
        }
    }
    imshow("result", img);
}
```
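The C++ version detects on a copy of the image shrunk by `fx = 1 / scale`, so each rectangle it gets back is in the coordinates of that smaller image and has to be multiplied by `scale` before drawing on the original; with `tryflip`, rectangles found in the mirrored image are also mapped back with `cols - x - width`. A minimal sketch of that arithmetic in plain C++, assuming hypothetical `Rect`, `Point` and `Circle` structs in place of the OpenCV types and `std::lround` in place of `cvRound` (the two may differ by one pixel on exact .5 values); `unflip`, `toCircle` and `toCorners` are illustrative names:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-ins for cv::Rect and cv::Point (integer pixels).
struct Rect { int x, y, width, height; };
struct Point { int x, y; };
struct Circle { Point center; int radius; };

// tryflip branch: a rectangle found in the horizontally mirrored image of
// width `cols` is mapped back with x' = cols - x - width.
Rect unflip(const Rect& r, int cols) {
    return Rect{cols - r.x - r.width, r.y, r.width, r.height};
}

// Near-square detections (0.75 < width/height < 1.3) become a circle in
// original-image coordinates; returns false when a rectangle should be drawn.
bool toCircle(const Rect& r, double scale, Circle& out) {
    assert(scale > 0.0 && r.height > 0);
    double aspect = static_cast<double>(r.width) / r.height;
    if (!(0.75 < aspect && aspect < 1.3))
        return false;
    // std::lround stands in for cvRound here.
    out.center.x = static_cast<int>(std::lround((r.x + r.width * 0.5) * scale));
    out.center.y = static_cast<int>(std::lround((r.y + r.height * 0.5) * scale));
    out.radius = static_cast<int>(std::lround((r.width + r.height) * 0.25 * scale));
    return true;
}

// Corners of the rectangle drawn for the other detections, scaled back up.
void toCorners(const Rect& r, double scale, Point& topLeft, Point& bottomRight) {
    topLeft = Point{static_cast<int>(std::lround(r.x * scale)),
                    static_cast<int>(std::lround(r.y * scale))};
    bottomRight = Point{static_cast<int>(std::lround((r.x + r.width - 1) * scale)),
                        static_cast<int>(std::lround((r.y + r.height - 1) * scale))};
}
```

With `scale = 2.0`, a 40×40 face at (10, 20) in the small image becomes a circle centred at (60, 80) with radius 40 in the original; a 40×10 detection is too elongated and falls back to a rectangle.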

As you can see, it is easy to build capable image-processing features such as face and eye detection that run locally, without sending images to a cloud service and without paying for each request.